"Intelligent design" (ID) is the assertion that there is evidence that major features of life have been brought about, not by natural selection, but by the action of a designer. This involves negative arguments that natural selection could not possibly bring about those features. And the proponents of ID also claim positive arguments.

Critics of ID commonly argue that it is not science. For its positive predictions of the behavior of a designer they have a good point. But not for its negative criticisms of the effectiveness of natural selection, which are scientific arguments that must be taken seriously and evaluated. Look at Figure 1, which shows a cartoon design from T-shirts sold by an ID website, Access Research Network, which also sells ID paraphernalia (I am grateful to them for kind permission to reproduce it).

(click here for image)

Figure 1. A summary of the major arguments of "intelligent design", as they appear to its advocates, from Access Research Network's website http://www.arn.org. Merchandise with the cartoon is available from http://www.cafepress.com/accessresearch. Copyright Chuck Assay, 2006; all rights reserved. Reprinted by permission.

As the bulwark of Darwinism defending the hapless establishment is overcome, note the main lines of attack. In addition to recycled creationist themes such as the Cambrian Explosion and cosmological arguments about the fine-tuning of the universe, the ladder is Michael Behe's argument about molecular machines (Behe 1996). The other main attack, the battering ram, is the "information content of DNA" which is destroying the barrier of "random mutation".

The "irreducible complexity of molecular machines" arguments of Michael Behe have received most of the publicity; William Dembski's more theoretical arguments involving information theory have been harder for people to understand. There have been a number of extensive critiques of Dembski's arguments published or posted on the web (Wilkins and Elsberry 2001; Godfrey-Smith 2001; Rosenhouse 2002; Schneider 2001, 2002; Shallit 2002; Tellgren 2002; Wein 2002; Elsberry and Shallit 2003; Edis 2004; Shallit and Elsberry 2004; Perakh 2004a, 2004b; Tellgren 2005; Häggström 2007). They have pointed out many problems. These range from the most serious to nit-picking quibbles.

In this article, I want to concentrate on the main arguments that Dembski has used. With a few exceptions, many of the points I will make have already been raised in these critiques of Dembski — this is primarily an attempt to make them more accessible.

Digital codes

Stephen Meyer, who heads the Discovery Institute's program on ID, describes Dembski's work in this way:

We know that information — whether, say, in hieroglyphics or radio signals — always arises from an intelligent source. .... So the discovery of digital information in DNA provides strong grounds for inferring that intelligence played a causal role in its origin. (Meyer 2006)

What is this mysterious "digital information"? Has a message from a Designer been discovered? When DNA sequences are read, can they be converted into English sentences such as: "Copyright 4004 bce by the intelligent designer; all rights reserved"? Or can they be converted into numbers, with one stretch of DNA turning out to contain the first 10 000 digits of π? Of course not. If anything like this had happened, it would have been big news indeed. You would have heard by now. No, the mysterious digital information turns out to be nothing more than the usual genetic information that codes for the features of life, information that makes the organism well-adapted. The "digital information" is just the presence of sequences that code for RNA and proteins — sequences that lead to high fitness.

Now we already knew that they were there. Most biologists would be surprised to hear that their presence is, in itself, a strong argument for ID — biologists would regard them as the outcome of natural selection. To see them as evidence of ID, one would need an argument that showed that they could only have arisen by purposeful action (ID), and not by selection. Dembski's argument claims to establish this.

Specified Complexity

How does his argument work? Dembski (1998, 2002, 2004) first sets forth an Explanatory Filter to detect design. To make a longish story short, it concludes in favor of design whenever it finds Specified Complexity. He requires that the information in question be complex, so that the probability of that DNA sequence's occurring by chance would be less than 1 in 10150. Dembski chooses this value to avoid any possibility that the sequence would arise even once in the history of the universe. If this complexity were the only issue, his argument could instantly be dismissed: any random sequence of 250 bases would be about as improbable as this. Similarly, any random five-card hand in a card game has a chance of only one in 2 598 960 and this rare an event occurs every time we deal, so that the rarity is not a cause for concern.

This is where the "specified" part comes in. Dembski requires that the information also satisfy a requirement that makes it meaningful. He illustrates this with a variety of analogies having different kinds of meaning. In effect, he is saying that the relevant quantity is the probability that a random sequence of DNA is as meaningful as the one observed.

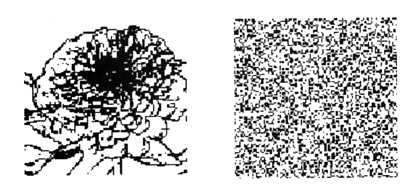

Figure 2. Two 101x100 pixel images, each with 3511 black pixels and the rest white. Both have equal information content. Which one has specified complexity, as judged by its resemblance to an image of a flower?

The image on the left of figure 2 shows an example. It is a 101-by-100–pixel image. If our specification were, say, that the image be very much like a flower, the image on the left would be in contention (not surprisingly, as it started as a digital photograph of a zinnia). Of all the possible arrangements of 10 100 black-and-white pixels, it is among the tiny fraction for which the images are much like a flower. There are 210100 possible such images of this size, which is about 103040, a vast number. We do not know how many of these would look as like, or more like, a flower than this, but suppose that it is not greater than 10100. That means that, if we choose an image randomly from all possibilities, the probability that an image would look this much (or more) like a flower is less than 10100/103040, which is 10-2940.

The image on the right would not be in contention in any contest for images that looked like a flower. Like the left image, it has 3511 black pixels, but they seem to be arranged randomly. Both images have the same information content (10 100 bits), but the image on the left looks like a flower. It not only has information, it has information that is specified by being in a flower-like arrangement. This is a useful distinction, which Dembski attributes to Leslie Orgel. I cannot resist adding that a related concept, "adaptive information", appears in one of my own papers, perhaps the one least frequently cited (Felsenstein 1978).

Sequences in the genome that code for proteins and RNAs, and associated regulatory sequences, have specified information. Although Dembski (2002: 148) mentions a number of possible different criteria, the one that will concern us here is fitness. Sequences contain information that makes the organism well adapted if it has high fitness, and the specified information will be judged by the fraction p of all possible sequences that would have equal or higher fitness.

(Dembski also defines specified information in another way — using concepts from algorithmic information theory and saying that information is specified if it can be described simply. A perfect sphere would then be more strongly specified than an actual organism. But this has nothing to do with fitness or with explaining adaptation. I will concentrate here on explaining adaptation.)

Specified complexity does one thing — when it is observed, we can be sure that purely random processes such as mutation are highly unlikely to have produced that pattern, even once in the age of the universe. But can natural selection produce this specified complexity? Dembski argues that it cannot — that he can show that these strongly nonrandom patterns cannot be designed by natural selection.

To support that argument, Dembski makes two main arguments. The first involves a Law of Conservation of Information — he argues that it prevents the process of natural selection from increasing the amount of adaptive information in the genome. The second uses the No Free Lunch theorem to argue that search by an evolutionary algorithm cannot find well-adapted genotypes. Let us consider these in turn.

Conservation of Information

For his concept of the Law of Conservation of Information, Dembski points to a law stated by the late Peter Medawar. In its clearest form it states that a deterministic and invertible process cannot alter the amount of information in a sequence. If we have a function that turns one DNA sequence X into another one Y, and if this function is invertible, then there is also a reverse function that can recover the original sequence X from the sequence Y. Any information that was present in the original sequence cannot have been lost, as we can get the original sequence back.

This is fairly obviously true. For example, if we take the picture of the flower above, and scramble the order of its pixels, we destroy its resemblance to a flower. But if we did so using, say, a computer random number generator (a pseudorandom number generator) to make a permutation of the pixels, we could record the permutation we used, and use it at any time to unscramble the picture. The original information is conserved, because it has been hidden by the scrambling, but not really lost.

Does this mean that such a process cannot increase or decrease the amount of information in the genome? Yes, if we simply mean information, but no, if we mean specified information. Here I am disagreeing with Dembski on a critical point. In his reformulation of Medawar's theorem "the complex specified information in an isolated system of natural causes does not increase" (Dembski 2002: 169). Note that he is discussing not simply information, but specified information. Now look again at the pixelated flower. I said that the second figure had the same number of black pixels, distributed randomly. The reason I knew this is that the second picture is simply the first picture with its pixels scrambled. I generated the permutation using a pseudorandom random number generator and can easily tell you how to generate it yourself, so that you can do the scrambling yourself and get exactly the same result, and you can also make the tables needed to unscramble the picture. So no information was lost.

But the amount of specification certainly was lost. The second picture would be instantly rejected from any "like a flower" contest. When we use the permutation to unscramble the picture, we create a large amount of specification by rearranging the random pixels into a flowerlike form. We blatantly violate Dembski's version of Medawar's theorem.

Dembski's proof

Why am I saying this, when Dembski does sketch a proof of his Law of Conservation of Specified Complexity? How can he have proven the impossible? He does this by changing the specification. If the original permutation, from the first picture to the second, is called F, we can call the reverse permutation, the one that converts the second picture back into the first, G. Dembski's argument points out that the first picture has the specification "like a flower". The second picture has an equivalent specification: "when permuted by G, like a flower". For every picture that is more like a flower than the first picture, there is one that we would get when applying the permutation F to it. That permuted picture will of course satisfy the second specification to the same extent in that, when permuted back by G, it too is more like a flower. So both pictures have specifications that are equally strong, and that is the essence of Dembski's proof. Dembski's proof has been strongly criticized by Elsberry and Shallit (2003; Shallit and Elsberry 2004), who pointed out that it violates a condition that the specification has to be produced from "background information", and thus has to be independent of the transformations F and G. The specification of G is not. But even if their criticism of Dembski's proof were dismissed, and Dembski's proof accepted as correct, in any case Dembski's proof is completely irrelevant. We want to explain how DNA sequences come to contain information that makes the organism highly fit (by coding for adaptations). The specification that should interest us is this one: "codes for an organism that is highly fit". Dembski is applying his proof by arguing that it shows that no random or deterministic function can increase the specified information in a genome. The permutations I have been using as examples are deterministic functions, and his theorem would apply to them. If a genome codes for a highly fit organism, so that it satisfies the specification, when it is permuted it does not satisfy it. The scrambled genome is dreadfully bad at coding for a highly fit organism. And when we use the unscrambling permutation G on it, we create the specification of the information, for this original specification which uses fitness.

The flaw in Dembski's argument is that, to test the power of natural selection to put specified information into the genome, we must evaluate the same specification ("codes for an organism that is highly fit") on it before and after. If you could show that the scrambled picture and the unscrambled picture do equally well in satisfying that same specification, you would go far to prove that natural selection cannot put adaptive information into the genome. Our flower example shows that there is a big difference in whether the original specification is satisfied before and after the permutation. Scrambling the sequence of a gene may not destroy its information content, if we have used a known permutation that can later be undone. But the scrambling certainly will destroy the functioning, and thus the fitness, of the gene. Likewise, unscrambling it can dramatically increase the fitness of the gene. Thus Dembski's argument, in its original form, can be seen to be irrelevant. And when put into a meaningful form by requiring that the specification we evaluate is the same one before and after, the example presented here shows his argument to be wrong.

Generating specified information

Evolution does not happen by deterministic or random change in a single DNA sequence, but in a population of individuals, with natural selection choosing among them. The frequencies of different alleles change. Considering natural selection in a population, we can clearly see that a law of conservation of specified information, or even a law of conservation of information, does not apply there.

If we have a population of DNA sequences, we can imagine a case with four alleles of equal frequency. At a particular position in the DNA, one allele has A, one has C, one has G, and one has T. There is complete uncertainty about the sequence at this position. Now suppose that C has 10% higher fitness than A, G, or T (which have equal fitnesses). The usual equations of population genetics will predict the rise of the frequency of the C allele. After 84 generations, 99.9001% of the copies of the gene will have the C allele.

This is an increase of information: the fourfold uncertainty about the allele has been replaced by near-certainty. It is also specified information — the population has more and more individuals of high fitness, so that the distribution of alleles in the population moves further and further into the upper tail of the original distribution of fitnesses.

The Law of Conservation of Information has not considered this case. Even though the equations of change of gene frequencies are deterministic and invertible, when the gene frequencies are taken into account there is no law of conservation of information. The amount of information changes as the gene frequencies change (it can go either up or down, depending on the case). The specified information as reflected by the fitness does obey a law — in this simple case fitness constantly increases, as a result of the action of natural selection. So the only law we have is one that does predict the creation of specified information by natural selection. One might object that we have not actually created specified complexity because the increase in information has been only 2 bits, rather than the 500 bits (150 decimal digits) which is Dembski's minimum requirement for specified complexity. But what we have done is to describe the action of the mechanism that creates specified information — if this acts repeatedly at many places in the gene, specified complexity would arise. Thus one of the two main arguments used by Dembski can be seen to be wrong, when we consider a population.

No Free Lunch?

The second pillar of Dembski's argument is his use of the No Free Lunch theorem. This gave his 2002 book its title, and Dembski (2002: xix) declares the chapter on this to be "the climax of the book". The theorem was invented by computer scientists (Wolpert and Macready 1997) who were concerned with the effectiveness of search algorithms. It is worth giving a simple explanation of their theorem in the context of a simple model of natural selection. Imagine a space of DNA sequences that has to be searched. Suppose that the sequences are each 1000 bases long. There are 4 x 4 x 4 x … x 4 = 41000 possible sequences, which in alphabetic order would go from AAAA...A to TTTT...T. Now imagine that our organism is haploid, so that there is only one copy of the gene per individual, and suppose that each of these sequences has a fitness. A very tiny fraction of the sequences is functional, and almost all of the rest have fitness zero.

Suppose that we want to find an organism of high fitness, and we want to do so by looking at 10 000 different DNA sequences. The best we can do, of course, is to take the highest one we find among these. Now note that 41000 is about 10602, a number far greater than the number of elementary particles in the universe. It is not unreasonable to guess that the fraction of DNA sequences that has a nonzero fitness is tiny — let's be very generous and say 1 in 1020.

One way we could search would be at random. Pick one of the DNA sequences, then pick another completely at random, then another completely at random, and continue on until 10 000 different ones have been examined. As we are picking at random, each pick has essentially one chance in 1020 of finding a sequence with nonzero fitness. It should immediately be apparent that we have almost no chance of finding any sequence with nonzero fitness. In fact we have less than one chance in 1016. So a completely random search is a really terrible way to increase fitness — it will overwhelmingly often find only sequences that cannot survive. In effect, it is looking for a needle in a haystack, and failing.

Of course, evolution does not do a completely random search. A reasonable population genetic model involves mutation, natural selection, recombination and genetic drift in a population of sequences. But we can make a crude caricature of it by having only one sequence, and making, at each step, a single mutational change in it. If the change improves the fitness, the new sequence is accepted. Suppose that we continue to do this until 10 000 different sequences have been examined. We will end with the best of those 10 000.

Will this do better? In the real world, it will if we start from a slightly good sequence. Each mutation carries us to a sequence that differs by only one letter. These tend to be sequences that are somewhat lower, or sometimes somewhat higher, in fitness. On average they are lower, but the chance that one reaches a sequence that is better is not zero. So there is some chance of improving the fitness, quite possibly more than once. A fairly good way to find sequences with nonzero fitnesses is to search in the neighborhood of a sequence of nonzero fitness.

The No Free Lunch (NFL) theorem states that if we consider the list of all possible sequences, each with a fitness written next to it and if we average over all the ways that those fitnesses could be assigned to the sequences, then no search method is better than any other. We are averaging over all the orders in which we could write the fitnesses down next to the list of sequences. Almost all of these orders are just like random associations of fitnesses with genotypes. That means that search by genetic mutation could not do any better than a hopelessly bad method such as complete random choice of sequences. The NFL theorem considers all the different ways fitness could be associated with genotype. The vast number of those are like random scramblings. For those assignments of fitnesses to genotypes, when we mutate a sequence by even one base, the fitness of the new sequence is the same as it would be if it were drawn at random from among all other possible sequences.

This randomization destroys all hope of finding a better fitness by mutating. Each single-base mutation is then just as bad as changing all of the bases simultaneously. It is as if we were on the side of a mountain and took one step. In the real world, this would carry us a bit up or a bit down (though sometimes over a cliff). In the No Free Lunch world, it would carry us to the altitude of a random spot on the globe, and that would most often plunge us far downward. In sequence space the prospects are even more gloomy than on the globe, as all but an extremely tiny fraction of sequences have fitness zero, and thus they have no prospects.

The NFL theorem is correct, but it is not relevant to the real world of evolution of genomes. This point has been overlooked in some responses to Dembski's use of the theorem. For example, H Allen Orr in The New Yorker (Orr 2005) and David Wolpert in a review of Dembski's book (Wolpert 2003) both argue against Dembski by pointing out phenomena such as coevolution that are not covered by the NFL theorem. In effect, they are conceding that for simple sequence evolution, the NFL theorem rules out adaptation by natural selection. In arguing this way, they are far too pessimistic about the capabilities of simple sequence evolution. They have overlooked the NFL theorem's unrealistic assumptions about the random way that fitnesses are associated with genotypes, which in effect assumes mutations to have disastrously bad fitness.

Mutations

In the real world, mutations do not act like this. Yes, they are much more likely to reduce fitness than to increase it, but many of them are not lethal. I probably carry one — I have a strong aversion to lettuce, which to me has a bitter mineral taste. This is probably a genetic variation in one of my odorant receptor genes. It makes salad bars problematic, and at sandwich counters I spend a lot of time scraping the lettuce off. But it has not killed me — yet. The great body of empirical information about the effects of mutation in many organisms makes it clear that a great many mutations are not instantly lethal. They do on average make things worse, but they do not plunge us instantly back into the primordial organic soup.

In Dembski's NFL argument a single base change would have the same effect, on average, as changing all the bases in the gene simultaneously. A single amino acid substitution in a protein would have the same effect as replacing the whole protein by a random string of amino acids. This would make the protein totally inactive. That changes of a single base or a single amino acid do not have this sort of effect is strong evidence that mutations are much more likely to find another almost-functional sequence nearby. The real fitness landscape is not a scrambled "needle-in-a-haystack" landscape in which a sequence of moderately good fitness is surrounded only by sequences whose fitness is zero. In the real world, genotypes near a moderately good one often have moderately good fitnesses.

Empirical evidence

Note that if Dembski's arguments were valid, they would make adaptation by natural selection of any organism, in any phenotype, essentially impossible. For that would require adaptive information to be encoded into the genome by natural selection. According to Dembski's argument we would not need to worry: bacteria infecting a patient could not evolve antibiotic resistance. Human immunodeficiency viruses (HIV) would not become resistant to drugs. Insects would not evolve resistance to insecticides. Dembski's designer would be busy indeed: he would need to design every last adaptation, leaving out only a few that might be purely accidental.

Dembski himself seems unable to draw this self-evident conclusion from his own argument. He acknowledges that "the development of antibiotic resistance by pathogens via the Darwinian mechanism is experimentally verified and rightly of great concern to the medical field" (Dembski 2002: 38). But by saying that he undercuts his own argument — if correct, his argument would actually prove that the adaptive information in the bacterial genome could not be created by natural selection, except by the pure accident of mutation and genetic drift, unaided by natural selection.

His argument will also be news to animal and plant breeders. They use simple forms of artificial selection such as breeding from the individuals that have the best phenotypes. These forms of selection are like natural selection in that they do not use detailed information about individual genes — they do not require a particular detailed design. Dembski's argument implies that the breeders' efforts are in vain. They cannot create changes of phenotype by artificial selection, as this should be as ineffective as natural selection. Artificial selection provided Darwin with such powerful examples that he opened his book with an entire chapter on "Variation Under Domestication" in which he discussed case after case of changes due to artificial selection, but Dembski does not discuss artificial selection at all, mentioning it only once, in passing (in Dembski [2004] it is on page 311).

Smuggling?

Dembski (2002, sections 4.9 and 4.10) is not unaware of arguments that smoother fitness surfaces than the needle-in-a-haystack ones would allow natural selection to be effective. For example, Richard Dawkins (1996) has a computer program to demonstrate the effectiveness of selection, which evolves a meaningless jumble of 28 letters into the phrase "Methinks it is like a weasel" by repeatedly mutating letters randomly and then accepting those offspring sequences that most closely match the target phrase. Each match improves the fitness, so that mutations that make the phrase closer are readily available. Dembski argues, however, that the information in the resulting phrase is not created by the natural selection — it is already there, in the target phrase. He calls this the "displacement problem" (2002, section 4.7).

But invariably we find that when specified complexity seems to be generated for free, it has in fact been front-loaded, smuggled in, or hidden from view. (Dembski 2002: 204)

Computer demonstrations of the power of natural selection to bring about adaptation do often have detailed targets that natural selection is to approach. It is easier to write the programs that way. In real life, the objective is higher fitness, and achieving that means having the organism's phenotype interact well with real physics, real chemistry, and real biology.

In these more real cases, the environment does not provide the genome with exact targets. Consider a population of deer being preyed upon by a population of wolves. We have little doubt that mutations among the deer will cause changes in the lengths of their limbs, the strength of their muscles, the speed of reaction of their nervous system, the acuity of their vision. Some of these will enable the deer to escape the wolves better, and those ones will tend to spread in the population. The result is a change in the design of the deer. But this information is not "smuggled in" by the wolves. They simply chase the deer — they do not evaluate their match to detailed pre-existing design specifications.

There have been computer simulations that mimicked this process. The most fascinating is that of Karl Sims (1994a, 1994b, 1994c), whose simulation evolves virtual creatures that swim or hop in intriguing and somewhat unpredictable ways. The creatures are composed of connected blocks that can move relative to each other, and they are selected only for effective movement without screening for any details of the design. All that is required is genotypes, phenotypes, some interaction between the phenotypes and an environment, and natural selection on one property — speed. There is no "smuggling". A similar simulation inspired by Sims's is Jon Klein's (2002) breve program, available for download.

Evolvability

Dembski makes another argument about the shape of the fitness function itself. If it is smooth enough to allow evolution to succeed, he argues that this is the result of more smuggling:

But this means that the problem of finding a given target has been displaced to the new problem of finding the information j capable of locating that target. ... To say that an evolutionary algorithm has generated specified complexity within the original phase space is therefore really to say that it has borrowed specified complexity from a higher-order phase space ... it follows that the evolutionary algorithm has not generated specified complexity at all but merely shifted it around. (Dembski 2002: 203)

He is arguing that the fitness surface itself must have been specially chosen out of a vast array of possibilities, and that this means that one started with the specified complexity already present. He is saying that the smoothness of real fitness functions is not typical — that without a large input of specified information one would be dealing instead with needle-in-a-haystack fitness functions where natural selection could not succeed.

Now, it is possible to have natural selection alter the fitness function. There is a small literature on the "evolution of evolvability". Altenberg (1995) showed a computer simulation where natural selection causes the extent of interaction among genes to become less, so that the genotypes tend to become ones that have a smoother fitness function.

But even this may not be necessary. Different genes often act in ways separated in space and time, and that reduces the chance of their interacting. A mutant affecting one's eye pigment typically does not interact with a mutant at a different gene affecting the bones in one's toe. That isolation does not require any special explanation. But in a world that has a needle-in-a-haystack fitness function everything interacts strongly with everything else.

In effect, that world has everything encrypted. If you get a password or a lock combination partially correct, you do not partly access the computer account or partly open the safe. The computer or the safe does not react to each change by saying "hotter" or "colder". Each digit or letter interacts with each other, and nothing happens until all of them are correct. But this encryption is not typical of the world around us. Password systems and combination locks must be carefully designed to be secure — and this design effort can fail.

The world we live in is not encrypted. Most parts of it interact very little with other parts. When my family leaves home for a vacation, we have to make many arrangements at home concerning doors, windows, lights, toilets, faucets, thermostats, garbage, notifying neighbors, stopping delivery of newspapers, and so on. If we lived in Dembski's encrypted universe, this would be impossible. Every time we changed the thermostat setting, the windows would come unlocked and the faucets would run. Every time we closed a window, the newspaper delivery would resume, or a neighbor would forget that we were leaving. (It's worse than that, in fact. The house would be totally destroyed.) But, as we live in the real universe, we can cheerfully set family members to carrying out these different tasks without their worrying about each other's actions. The different parts of the house scarcely interact.

Of course a house is a designed object, but it is not particularly hard to have its parts be almost independent. When architects train, they do not have to spend much of their time ensuring that the doors, when closed, will not cause the faucets to run.

We live in a universe whose physics might be special, or might be designed — I wouldn't know about that. But Dembski's argument is not about other possible universes — it is about whether natural selection can work to create the adaptations that we see in the forms of life we observe here, in our own universe, on our own planet. And if our universe seems predisposed to smooth fitness functions, that is a big problem for Dembski's argument.

Bibliographic note: Dembski's critics

Of the major arguments here, two are, I believe, my own. One is the argument that Dembski's Law of Conservation of Complex Specified Information could not succeed in proving that information cannot be generated by natural selection, because his Law requires us to change the specification to keep the amount of specified information the same. The other is the argument that changes of gene frequency by natural selection can increase specified information. The other major arguments will be found in some of the papers I cited. In particular, the argument that the No Free Lunch theorem does not establish that natural selection cannot do better than pure random search was also made by Wein 2002, Rosenhouse 2002, Perakh 2004b, Shallit and Elsberry 2004, Tellgren 2005, and Häggström 2007.

In conclusion

Dembski argues that there are theorems that prevent natural selection from explaining the adaptations that we see. His arguments do not work. There can be no theorem saying that adaptive information is conserved and cannot be increased by natural selection. Gene frequency changes caused by natural selection can be shown to generate specified information. The No Free Lunch theorem is mathematically correct, but it is inapplicable to real biology. Specified information, including complex specified information, can be generated by natural selection without needing to be "smuggled in". When we see adaptation, we are not looking at positive evidence of billions and trillions of interventions by a designer. Dembski has not refuted natural selection as an explanation for adaptation.

Acknowledgments

I wish to thank Joan Rudd, Erik Tellgren, Jeffrey Shallit, Tom Schneider, Mark Perakh, Monty Slatkin, Lee Altenberg, Carl Bergstrom, and Michael Lynch for helpful comments. Dennis Wagner at Access Research Network kindly gave permission for use of the wonderful cartoon "The Visigoths are Coming". Work on this paper was supported in part by NIH grant GM071639.

References

Altenberg L. 1995. Genome growth and the evolution of the genotype-phenotype map. In: Banzhaf W, Eeckman FH, editors. Evolution and Biocomputation: Computational Models of Evolution. Lecture Notes in Computer Science vol. 899. Berlin: Springer-Verlag. p 205–59.

Behe MJ. 1996. Darwin's Black Box: The Biochemical Challenge to Evolution. New York: Free Press.

Dawkins R. 1996. The Blind Watchmaker: Why the Evidence of Evolution Reveals a Universe Without Design. New York: WW Norton.

Dembski WA. 1998. The Design Inference: Eliminating Chance through Small Probabilities. Cambridge: Cambridge University Press.

Dembski WA. 2002. No Free Lunch: Why Specified Complexity Cannot be Purchased Without Intelligence. Lanham (MD): Rowman and Littlefield Publishers.

Dembski WA. 2004. The Design Revolution: Answering the Toughest Questions about Intelligent Design. Downer's Grove (IL): InterVarsity Press.

Edis T. 2004. Chance and necessity — and intelligent design? In: Young M, Edis T, editors. Why Intelligent Design Fails: A Scientific Critique of the New Creationism. New Brunswick (NJ): Rutgers University Press. p 139–52.

Elsberry WR, Shallit J. 2003. Information theory, evolutionary computation, and Dembski's complex specified information. Available on-line at <http://www.talkreason.org/

Felsenstein J. 1978. Macroevolution in a model ecosystem. American Naturalist 112 (983):177–195.

Godfrey-Smith P. 2001. Information and the argument from design. In: Pennock RT, editor. Intelligent Design Creationism and its Critics: Philosophical, Theological, and Scientific Perspectives. Cambridge (MA): MIT Press. p 575–596.

Häggström O. 2007. Intelligent design and the NFL theorems. Biology and Philosophy 23: 217–230.

Klein J. 2002. Breve: A 3D simulation environment for multi-agent simulations and artificial life. Available on-line at <http://www.spiderland.org/

Meyer SC. 2006 Jan 28. Intelligent design is not creationism. Daily Telegraph. Available on-line at <http://www.telegraph.co.uk/

Orr HA. 2005 May 30. Devolution: Why intelligent design isn't. The New Yorker. Available on-line at <http://www.newyorker.com/

Perakh M. 2004a. Unintelligent Design. Amherst (NY): Prometheus Books.

Perakh M. 2004b. There is a free lunch after all: William Dembski's wrong answers to irrelevant questions. In: Young M, Edis T, editors. Why Intelligent Design Fails: A Scientific Critique of the New Creationism. New Brunswick (NJ): Rutgers University Press. p 153–71.

Rosenhouse J. 2002. Probability, optimization theory, and evolution [review of William Dembski's No Free Lunch]. Evolution 56 (8): 1721–1722.

Schneider TD. 2001. Rebuttal to William A. Dembski's posting and to his book "No Free Lunch". Available on-line at <http://www.lecb.ncifcrf.

Schneider TD. 2002. Dissecting Dembski's "complex specified information". Available on-line at <http://www.lecb.ncifcrf.

Shallit J. 2002. Review of No Free Lunch: Why Specified Complexity Cannot Be Purchased Without Intelligence, by William Dembski. BioSystems 66 (1): 93-99. Available on-line at <http://www.cs.uwaterloo.ca/

Shallit J, Elberry WR. 2004. Playing games with probability: Dembski's complex specified information. In: Young M, Edis T, editors. Why Intelligent Design Fails: A Scientific Critique of the New Creationism. New Brunswick (NJ): Rutgers University Press. p 121–138.

Sims K. 1994a. Evolving virtual creatures. Computer Graphics (Siggraph '94 Proceedings), July: 15–22.

Sims K. 1994b. Evolving 3D morphology and behavior by competition. In: Brooks RA, Maes P, editors. Artificial Life IV Proceedings. Cambridge (MA): MIT Press. p 28–39.

Sims K. 1994c. Evolved virtual creatures. Available on-line at <http://www.genarts.com/

Tellgren E. 2002. On Dembski's law of conservation of information. Available on-line at <http://www.talkreason.org/articles/dembski_LCI.pdf>. Last accessed September 7, 2007.

Tellgren E. 2005. Free noodle soup. Available on-line at <http://www.talkreason.org/articles/nfl_gavrilets6.pdf>. Last accessed April 15, 2007.

Wein R. 2002. Not a free lunch but a box of chocolates: A critique of William Dembski's book No Free Lunch. Available on-line at <http://www.talkorigins.org/

Wilkins JS, Elsberry WR. 2001. The advantages of theft over toil: The design inference and arguing from ignorance. Biology and Philosophy 16 (5): 711–724.

Wolpert DH, Macready WG. 1997. No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation 1 (1): 67–82.

Wolpert D. 2003. Review of No Free Lunch: Why specified complexity cannot be purchased without intelligence. Mathematical Reviews MR1884094 (2003b:00012). Also available at <http://www.talkreason.org/

Title: Has Natural Selection Been Refuted? The Arguments of William Dembski Author(s): Joe Felsenstein Volume: 27 Issue: 3–4 Year: 2007 Date: May–August Page(s): 20–26